Personal Access Grid nodes for Immersive Collaboration

Supported by NOAA

HPCC 2005

Albert J. Hermann,

Christopher Moore, Kate Hedstrom and Phyllis Stabeno

This page summarizes our development of a method for lifelike collaboration between individuals at remote locations using the Personal Access Grid and related software, combined with stereo-immersive hardware (e.g. the GeoWall). Major findings and demonstrations under this project include the following:

- An easily installed videoconferencing client was identified for Windows XP platforms. This client is available through the web via the Virtual Rooms Videoconferencing System (VRVS).

- Using dual cameras and the GeoWall, we conducted a live demonstration of stereo-immersive telepresence at the NOAA HPCC Network working Group FY 2005 Annual Review Meeting.

- A PowerPoint presentation based on our findings is available at http://www.pmel.noaa.gov/~hermann/agstereo/agstereo.ppt.

Project Summary

This project was designed to investigate the feasibility of low-cost teleconferencing and desktop sharing over the internet through a variety of platforms. Several Windows-based tools are now available for videoconferencing among two or more individuals; these include the ubiquitous Microsoft Messenger and many commercial products. Open-source community efforts include Access Grid (AG) software and hardware, which are designed to work with both Windows and Linux platforms. A recent version of the Access Grid software allows videoconferencing through individual PCs and simple webcams, i.e. a Personal Access Grid or PIG.

We began our investigations with the Personal Access Grid. As with full-fledged Access Grid nodes, PIG software includes desktop, web page, and PowerPoint sharing among conference participants. Video and audio services rely on the open-source Video Conferencing Tool (VIC) and Robust Audio Tool (RAT). The PIG software performed admirably on our Windows and Linux platforms once installed; however, we found the registration and installation process currently cumbersome enough to deter non-specialists (that is, the average scientist with a PC). Additional web searching revealed a closely related but more user-friendly gateway to AG-like services, the Virtual Rooms Videoconferencing System (VRVS). VRVS was initially developed by and for the physics community, but is now available to other researchers. The service uses Reflectors (i.e. remote hosts), interconnected using unicast tunnels and multicasting, to route video and audio streams. As with the AG, VRVS uses VIC and RAT at its core; however, it has a simple Java-based front end for use through web browsers. Registration is fast and simple, and the user is prompted where necessary for one-click installation of VIC, RAT, and other tools. Windows, Linux, and Mac OS X platforms are supported. In practice, we found that Windows XP was the simplest and most reliable platform for installation and use.
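The Reflector routing described above can be pictured as a flood over a graph of reflectors. The sketch below is a toy model under our own assumptions, not VRVS's actual protocol: the reflector and client names are hypothetical, each unicast tunnel is treated as a graph edge, and a stream injected at one reflector is delivered once to every other attached client, with a visited set standing in for whatever loop prevention the real service uses.

```python
from collections import deque


def reflector_fanout(tunnels, clients, source_reflector, sender):
    """Toy model: which clients receive a stream injected by `sender`?

    tunnels: reflector name -> list of peer reflectors (unicast tunnels)
    clients: reflector name -> list of client names attached to it
    Returns the set of clients (excluding the sender) that get the stream.
    """
    received = set()
    visited = {source_reflector}
    queue = deque([source_reflector])
    while queue:
        reflector = queue.popleft()
        # Deliver the stream to every local client except the sender.
        for client in clients.get(reflector, []):
            if client != sender:
                received.add(client)
        # Forward once over each tunnel; the visited set prevents loops.
        for peer in tunnels.get(reflector, []):
            if peer not in visited:
                visited.add(peer)
                queue.append(peer)
    return received


if __name__ == "__main__":
    # Hypothetical topology: three reflectors in a chain.
    tunnels = {"reflector_a": ["reflector_b"],
               "reflector_b": ["reflector_a", "reflector_c"],
               "reflector_c": ["reflector_b"]}
    clients = {"reflector_a": ["pmel", "uw"],
               "reflector_b": ["viewer_1"],
               "reflector_c": ["viewer_2"]}
    print(sorted(reflector_fanout(tunnels, clients, "reflector_a", "pmel")))
```

The breadth-first flood keeps the model simple; a real reflector network also has to handle bandwidth limits and failed links, which this sketch ignores.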

Users log on through the VRVS web site and thereby connect to available meeting venues (that is, virtual meeting places). Meeting venues may be reserved in advance and password-protected.

The cameras used by participants may be simple USB webcams or more sophisticated analog video cameras plugged into a video capture device. A single user may invoke two cameras at once; this is useful for stereo visualization, as described below. Participants in a conference may easily share web pages. Sharing of full desktops is possible but was ultimately not pursued in our project due to firewall and security issues.

The ability of internet-based videoconferencing to penetrate NOAA firewalls is an ongoing issue. In the initial phases of this project we found that VRVS software worked easily through the PMEL firewall, whereas toward the end of the project we found that video service was inoperable without special requests to the system manager.
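Because firewall behavior changed during the project, a quick reachability test can help before a session. The following is a minimal sketch using Python's standard `socket` module; the function name `port_is_open` is hypothetical, and the check covers TCP only. VIC and RAT media normally travel as RTP over UDP, which a TCP connect test cannot verify, and the specific port VRVS required is not recorded in this report.

```python
import socket


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds.

    Any connection failure (refused, unreachable, timed out, name lookup error)
    is reported as False. This says nothing about UDP media traffic.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `port_is_open("reflector.example.org", 12345)` would test a placeholder host and port; substitute the values your system manager actually opens.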

A second aspect of this project explored the feasibility of immersive videoconferencing, that is, seeing the other participant(s) with binocular vision. We explored several avenues in this realm. The easiest approach appeared to involve two cameras spaced approximately the distance between the left and right eyes. If video images can be sent from both cameras, the viewer has the option of delivering these two images separately to his/her own two eyes, thus achieving immersive visualization. Dedicated dual-camera systems were considered for this purpose but did not appear cost-effective relative to simple webcams.
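Why the camera spacing matters can be made concrete with the standard pinhole model for two parallel cameras. Assuming a focal length $f$ (in pixels), a camera separation (baseline) $B$, and an object at distance $Z$, the horizontal disparity $d$ between the left and right images is given below, followed by a worked case with illustrative values that are not measurements from this project ($f = 800$ px, $B = 0.065$ m, roughly adult eye spacing, $Z = 2$ m):

```latex
d = \frac{f\,B}{Z}
\qquad\text{e.g.}\qquad
d = \frac{(800\ \text{px})(0.065\ \text{m})}{2\ \text{m}} = 26\ \text{px}
```

Spacing the cameras near eye separation keeps disparities close to what the viewer's own eyes would see; a wider baseline exaggerates depth, and a narrower one flattens it.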

A major potential complication in this approach is the need to synchronize the two images. If the left and right images are not synchronized, stereo viewing ranges from painful to impossible. Initially we believed that inter-camera synchronization would be poor over the internet. Hence, we purchased a single video camera with a "beam splitter" attachment, which placed the left- and right-eye views on the left and right halves, respectively, of each frame. This indeed provides perfect synchronization, but the tradeoff was a very narrow field of view (that is, half the usual width of the screen, the very opposite of a "wide screen" experience).
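The beam-splitter layout can be illustrated by splitting one captured frame into its two eye views. This is a minimal sketch assuming a frame represented as a list of pixel rows, with the left-eye view in the left half of each row; the function name is hypothetical, and real capture software would hand over an image buffer instead. Because both views come from the same frame they are synchronized by construction, but each eye receives only half the frame width (for example, 320 of 640 columns), which is the narrow field of view noted above.

```python
def split_side_by_side(frame):
    """Split a side-by-side stereo frame into (left_eye, right_eye) images.

    frame: non-empty list of rows, each row a list of pixels of equal length.
    The left-eye view is assumed to occupy the left half of every row.
    """
    width = len(frame[0])
    if width % 2:
        raise ValueError("frame width must be even for a side-by-side split")
    half = width // 2
    # Each eye gets half the columns of every row: synchronized, but narrow.
    left = [row[:half] for row in frame]
    right = [row[half:] for row in frame]
    return left, right


if __name__ == "__main__":
    frame = [[1, 2, 3, 4],
             [5, 6, 7, 8]]
    left, right = split_side_by_side(frame)
    print(left, right)
```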

Surprisingly, recent trials with two simple USB cameras connected through VRVS have in fact demonstrated excellent synchronization. All video feeds from conference participants show up as separate windows in VRVS, which may be resized and moved around the viewer’s desktop. Through previous HPCC support, we had acquired stereo immersion hardware based on dual projectors and polarizing filters (the GeoWall; see http://www.pmel.noaa.gov/~hermann/vrml/stereo.html). We connected this system to a PC with dual-monitor support and sent the left and right camera windows to separate projectors. With this hardware, viewers were able to experience a vivid stereo telepresence of any VRVS participant with dual cameras.
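With two independent cameras, synchronization comes down to pairing frames whose capture times are close. The sketch below is our own illustration, not part of VRVS: it assumes sorted per-camera timestamps in seconds (hypothetical inputs) and a user-chosen tolerance, such as half a frame interval. It pairs each left frame with the nearest right frame and drops pairs that are too far apart.

```python
from bisect import bisect_left


def pair_stereo_frames(left_times, right_times, tolerance):
    """Pair each left-camera timestamp with the nearest right-camera timestamp.

    Both lists must be sorted (seconds). Pairs whose offset exceeds `tolerance`
    are dropped, since mismatched left/right images make stereo viewing hard.
    Returns a list of (left_time, right_time) tuples.
    """
    pairs = []
    for t in left_times:
        i = bisect_left(right_times, t)
        # Candidates: the right frame just before t and the one at or after t.
        candidates = right_times[max(i - 1, 0):i + 1]
        if not candidates:
            continue
        nearest = min(candidates, key=lambda r: abs(r - t))
        if abs(nearest - t) <= tolerance:
            pairs.append((t, nearest))
    return pairs


if __name__ == "__main__":
    left = [0.0, 0.1, 0.2]
    right = [0.01, 0.12, 0.5]
    print(pair_stereo_frames(left, right, tolerance=0.03))
```

This does not enforce one-to-one matching, so a single right frame could be reused for two left frames; a fuller version would track which right frames are already taken.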

Our experience with VRVS on various platforms included the following:

1. Windows XP desktop PC – The VRVS client had very fast response. Logitech Orbit USB cameras exhibited excellent performance, both singly and in pairs (for stereo). No synchronization problems were encountered between cameras.

2. Windows XP Tablet PC – The VRVS client works through Wi-Fi at public locations but has very slow response. A poor-quality built-in camera on the PC hampered effective videoconferencing, but the major bottleneck was the Wi-Fi bandwidth.

3. Handheld PC – Despite repeated attempts, we couldn’t get VRVS-provided video or audio service to work on this platform. Limited bandwidth and processor speed are likely factors.

4. Linux PC – Persistent software issues hampered our use of this platform. VRVS and PIG software (VIC, RAT, etc.) were generally more difficult to install and use on Linux than on Windows.

Technical issues for VRVS included the following:

1. Firewall problems increased over the duration of this project, due to changes in NOAA security policy. Recently, we have needed the system manager to allow video service through a specific port on the client platforms.

2. The default audio and video settings of VIC and RAT in VRVS were adequate, but modifying VIC for greater video bandwidth radically improved performance. For audio, both headset and non-directional microphones worked well. In RAT, setting the talk volume to its minimum value resulted in the clearest signal. At times the audio and video signals got out of sync (audio lagging video), but this was easily fixed by restarting the RAT session.
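The effect of raising VIC's video bandwidth can be seen with simple arithmetic: at a fixed frame rate, the average compressed size available to each frame scales with the bandwidth limit. The helper below is a hypothetical illustration (the report does not record the settings actually used) and treats 1 kbps as 1000 bits per second.

```python
def bytes_per_frame(bandwidth_kbps, frames_per_second):
    """Average compressed bytes available per video frame.

    bandwidth_kbps: stream bandwidth limit in kilobits per second (1 kbit = 1000 bits)
    frames_per_second: video frame rate; must be positive
    """
    if frames_per_second <= 0:
        raise ValueError("frame rate must be positive")
    bits_per_second = bandwidth_kbps * 1000
    return bits_per_second / 8 / frames_per_second


if __name__ == "__main__":
    for kbps in (128, 512):
        print(kbps, "kbps ->", bytes_per_frame(kbps, 10), "bytes per frame at 10 fps")
```

For example, at 10 frames per second, 128 kbps leaves about 1,600 bytes per frame, while 512 kbps leaves 6,400, four times the detail budget per image.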

Expenditure Summary

- Videocamera with tripod $1000

- Beam splitter for videocamera $400

- USB video capture device and USB 2.0 card for Windows PC $100

- 3 Webcams $300

- Windows XP Tablet PC $900

- Handheld PC with camera $600

- Linux PCs $3000
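The itemized costs above can be totaled with a short script. The amounts are taken directly from the list, in US dollars; the dictionary and function names are illustrative.

```python
# Itemized project expenditures in US dollars, copied from the list above.
EXPENDITURES = {
    "Videocamera with tripod": 1000,
    "Beam splitter for videocamera": 400,
    "USB video capture device and USB 2.0 card for Windows PC": 100,
    "3 Webcams": 300,
    "Windows XP Tablet PC": 900,
    "Handheld PC with camera": 600,
    "Linux PCs": 3000,
}


def total_expenditure(items):
    """Sum itemized costs (US dollars)."""
    return sum(items.values())


if __name__ == "__main__":
    print(f"Total: ${total_expenditure(EXPENDITURES):,}")  # prints "Total: $6,300"
```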

Future Directions

The VRVS system appears to be the most user-friendly of the conferencing tools we tested. We were pleasantly surprised at the ability of a simple dual-webcam system to produce a vivid stereo-immersive effect, and will encourage others to deploy this simple setup for immersive conferencing. We anticipate further drops in the price of dedicated stereo cameras. We plan further demonstrations of this approach at conferences and schools, and will continue to explore low-cost stereo visualization options, including methods that avoid the use of auxiliary eyewear.

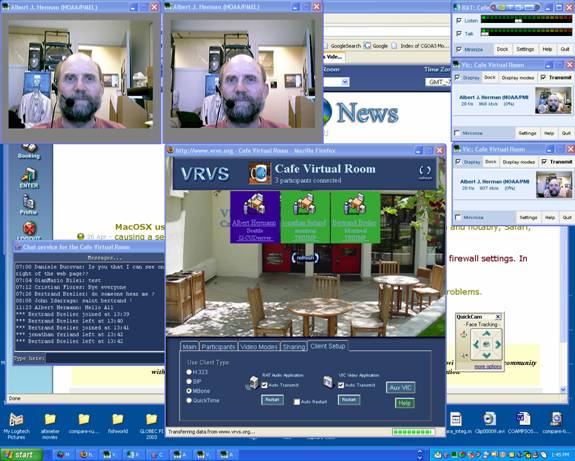

Figure 1. A VRVS session with stereo cameras.